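Seata has three roles: TC, TM, and RM. The TC (server side) is deployed as a standalone service, while the TM and RM (client side) are integrated into the business applications. High availability of seata-server relies on a registry, a configuration center, and a database.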

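The server currently supports three storage modes (store.mode): file, db, and redis (raft and mongodb are planned for later). file mode needs no changes and can be started directly, so the rest of this post focuses on the steps for starting in db mode.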

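Note: file mode is a standalone mode. Global transaction session data is read and written in memory and persisted to the local file root.data, so performance is relatively high.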

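db mode is the high-availability mode. Global transaction session data is shared through the database, so performance is somewhat lower.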

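redis mode is supported in Seata-Server 1.3 and later. Performance is relatively high, but transaction data can be lost, so configure a Redis persistence setting that suits your scenario in advance.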

This post mainly covers how to build a highly available cluster based on the Nacos registry and a MySQL database.

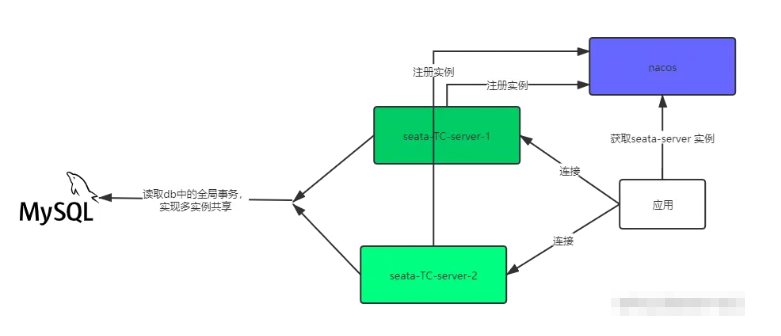

Principle and architecture

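High availability of seata-server rests mainly on the database and the registry. Every instance reads global transactions from the same database, so transaction state is shared across instances, and the registry handles dynamic management of the seata-server instances. The architecture diagram is shown below: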

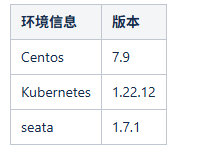

Deployment environment

Deployment steps

1. Create the seata_server database:

    create database seata_server character set utf8mb4 collate utf8mb4_general_ci;

Create the tables:

    -- -------------------------------- The script used when storeMode is 'db' --------------------------------

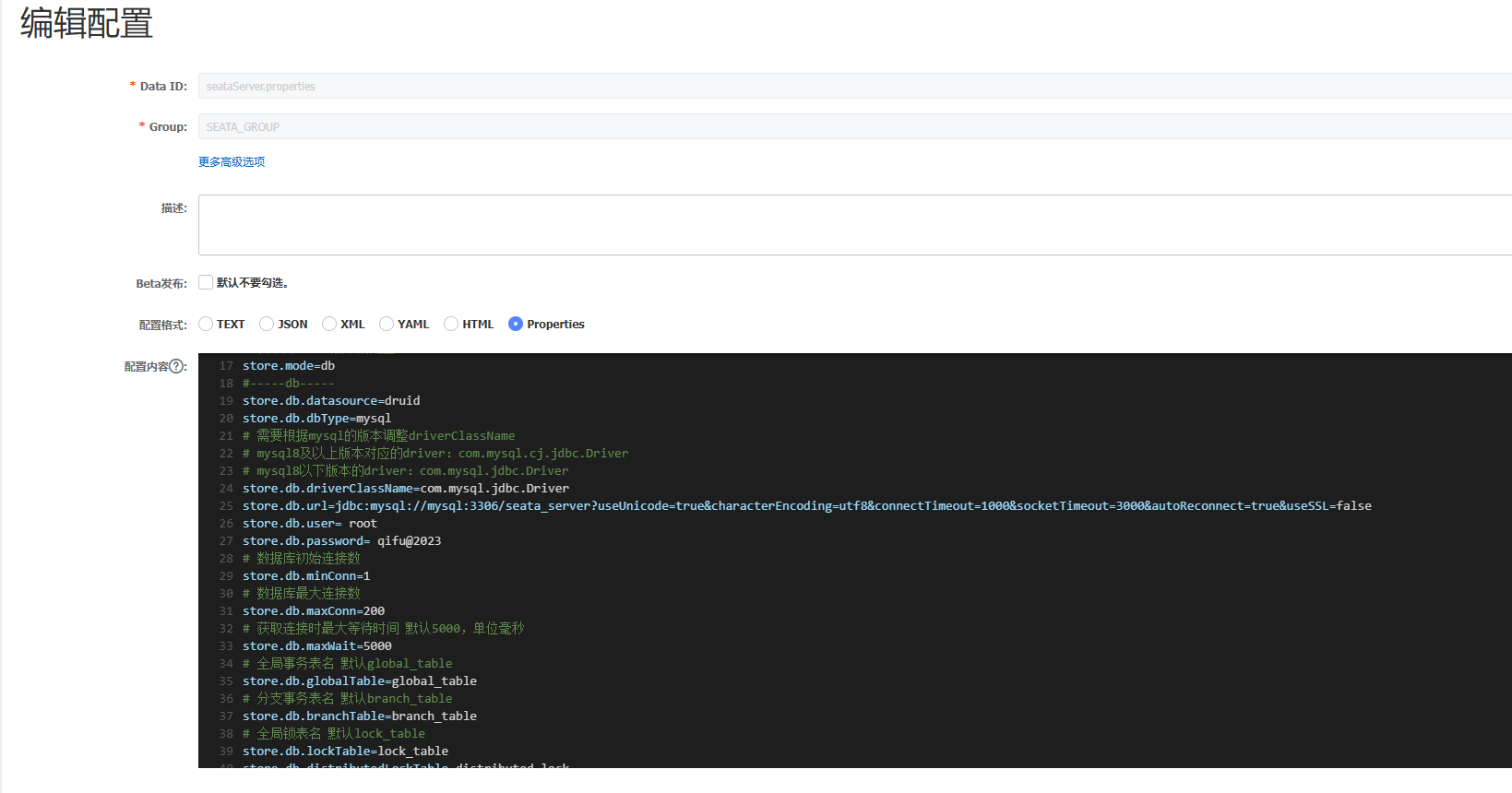

Add the Nacos configuration:

The configuration is as follows:

    store.mode=db

The seata-server.yaml file:

    apiVersion: v1
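Only the first line of the manifest survives above, so the following is a rough sketch of what such a file might contain, not the author's actual manifest. It assumes Seata 1.5 or later (where the server reads `/seata-server/resources/application.yml`), the public `seataio/seata-server` image, and a hypothetical ConfigMap named `seata-server-config` that holds an `application.yml` pointing the registry and config center at Nacos. The resource names, labels, image tag, and replica count are all illustrative.

```yaml
# Sketch only: names, labels, image tag and replica count are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: seata-server
  labels:
    app: seata-server
spec:
  replicas: 2                      # several instances sharing state through the database
  selector:
    matchLabels:
      app: seata-server
  template:
    metadata:
      labels:
        app: seata-server
    spec:
      containers:
        - name: seata-server
          image: seataio/seata-server:1.5.2   # assumed tag
          ports:
            - name: service
              containerPort: 8091             # default TC service port
            - name: console
              containerPort: 7091             # default web console port in 1.5+
          volumeMounts:
            - name: seata-config
              mountPath: /seata-server/resources/application.yml
              subPath: application.yml
      volumes:
        - name: seata-config
          configMap:
            name: seata-server-config         # hypothetical ConfigMap with application.yml
```

With db mode, the instances need no shared volume: the replicas coordinate only through the MySQL database and the Nacos registry.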

Deploy the service:

    kubectl apply -f seata-server.yaml

The Nacos service list:

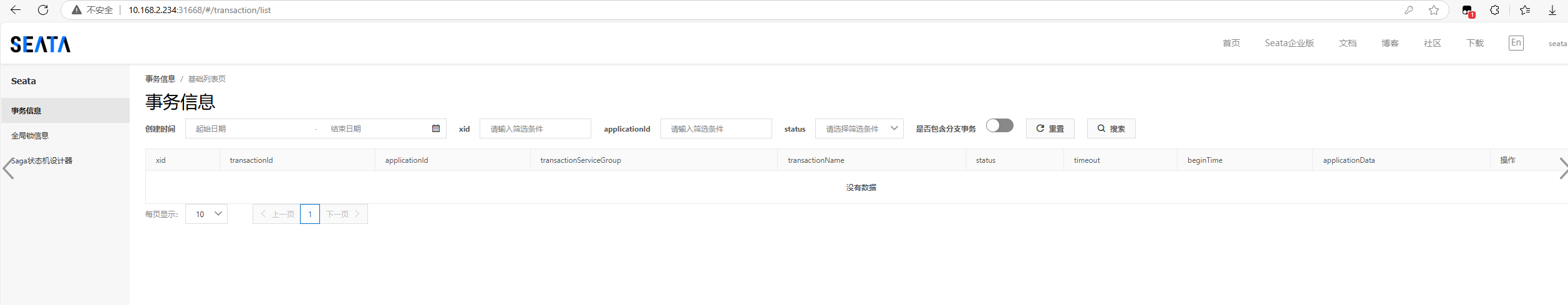

Access the web console through the NodePort:

Problems encountered

Problem:

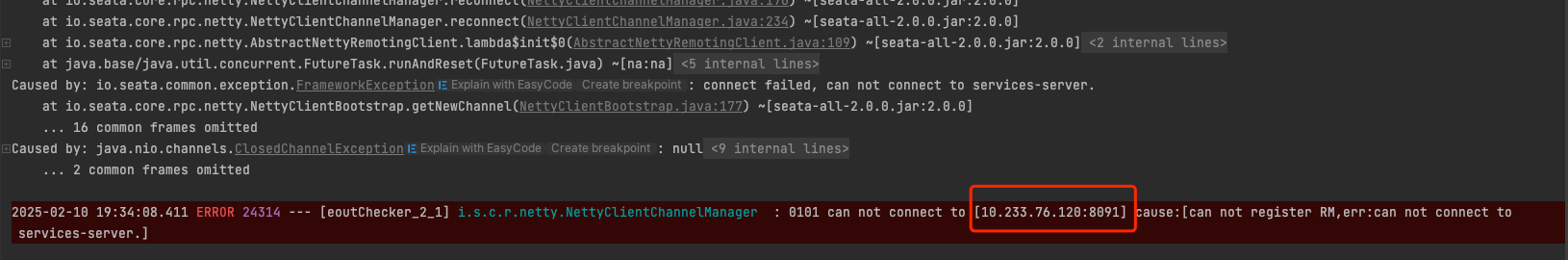

After seata is deployed on k8s, a client running on a local machine reports an error and cannot connect to the seata service:

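This happens because a local machine cannot reach pods inside the k8s cluster. Exposing the service through a NodePort alone does not help, because the client still connects to the address registered in Nacos: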

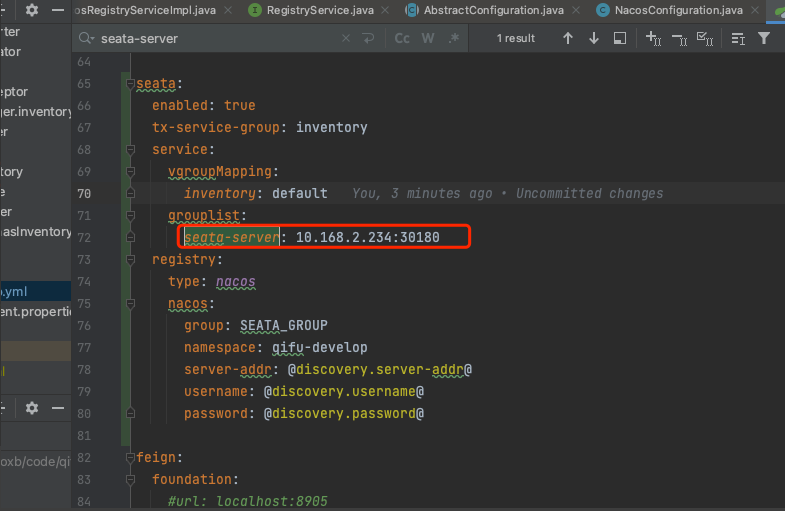

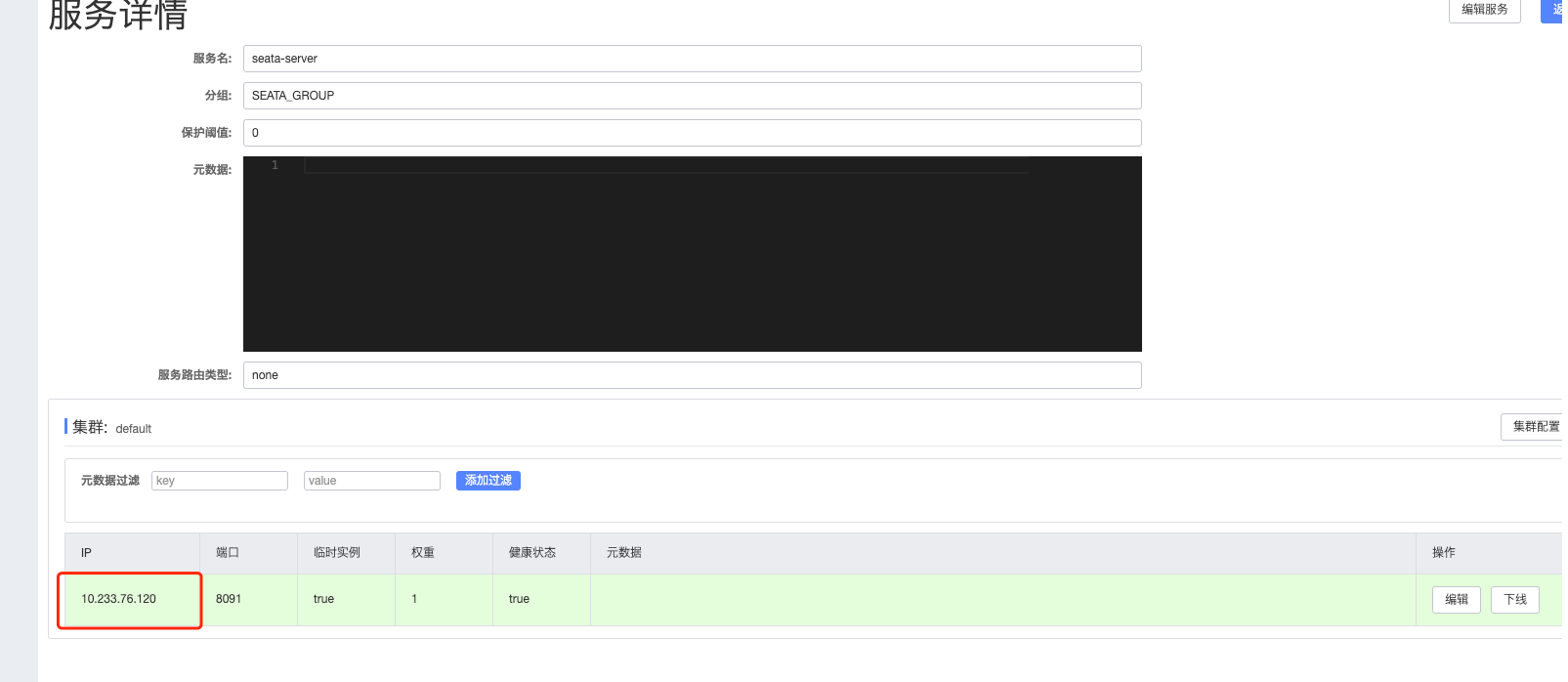

Looking at the seata service in Nacos shows that the registered IP is the pod IP:

Solution:

When starting the seata service, set its IP to the IP of the node host:

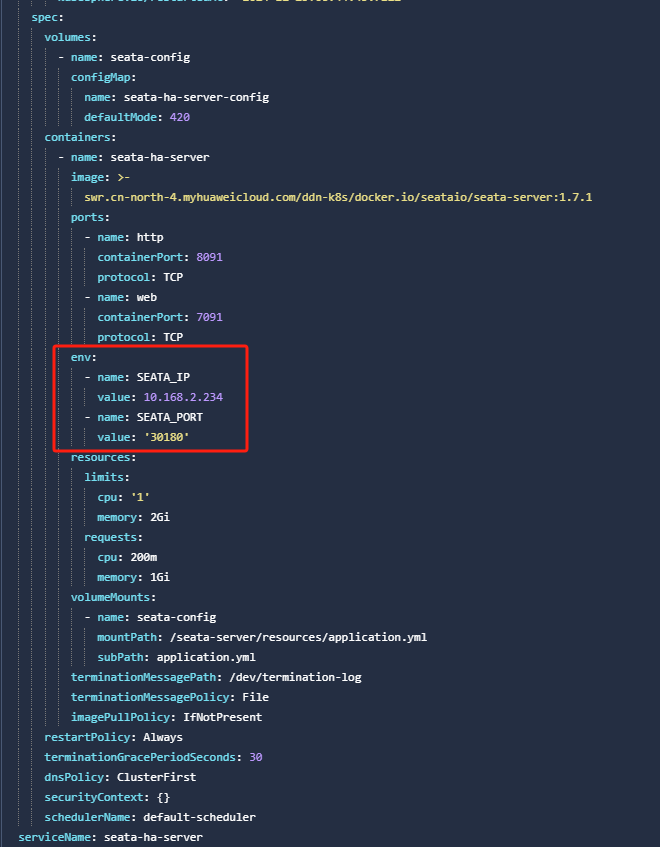

Modify the YAML file and add environment variables:

    env:

As shown:

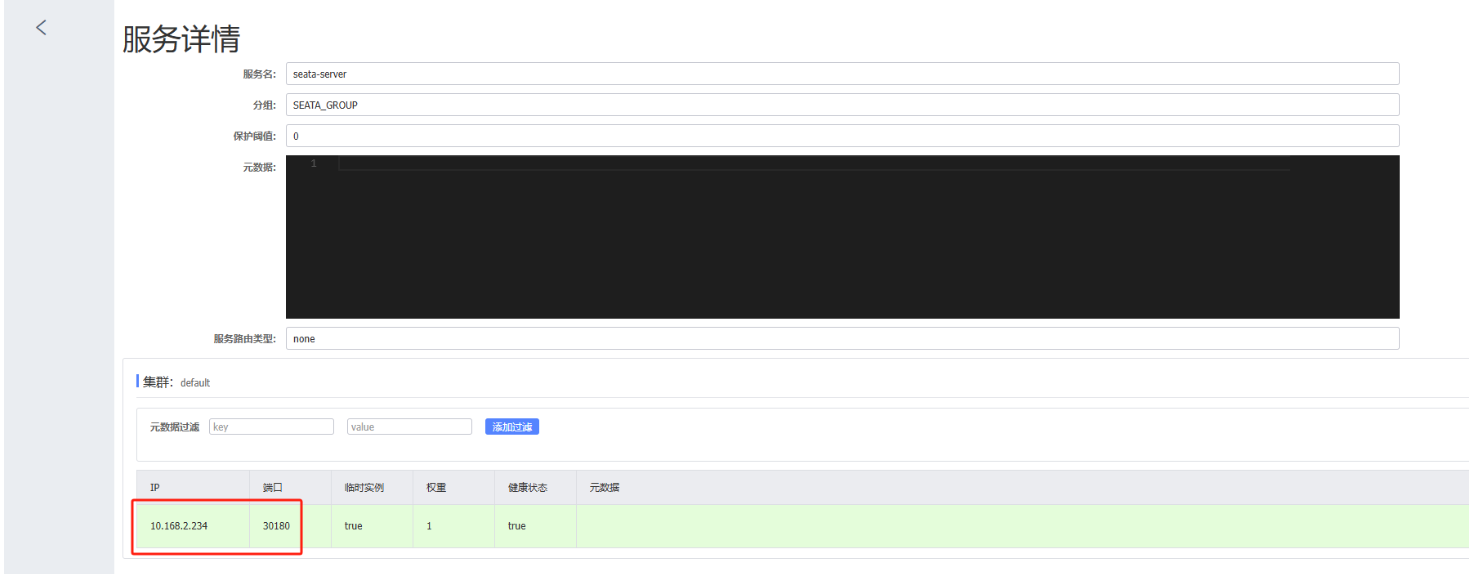
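The environment block above is truncated, so here is a sketch of one way it could look. It assumes the official `seataio/seata-server` image, which reads `SEATA_IP` and `SEATA_PORT` to decide the address it registers. Using the Downward API field `status.hostIP` and the port value `30091` are illustrative choices, not taken from the original manifest.

```yaml
# Fragment of the container spec in seata-server.yaml (sketch, not the original file)
env:
  # Register the node's IP in Nacos instead of the pod IP.
  # status.hostIP is filled in by the Kubernetes Downward API;
  # a fixed node IP given as a plain "value" string would also work.
  - name: SEATA_IP
    valueFrom:
      fieldRef:
        fieldPath: status.hostIP
  # Assumed value: the server listens on and registers this port,
  # so it should equal the nodePort exposed for the service.
  - name: SEATA_PORT
    value: "30091"
```

The port matters as much as the IP: clients dial exactly the IP and port stored in Nacos, so the registered port has to be one that is reachable on the node.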

After the service starts, check the IP of the seata service in Nacos:

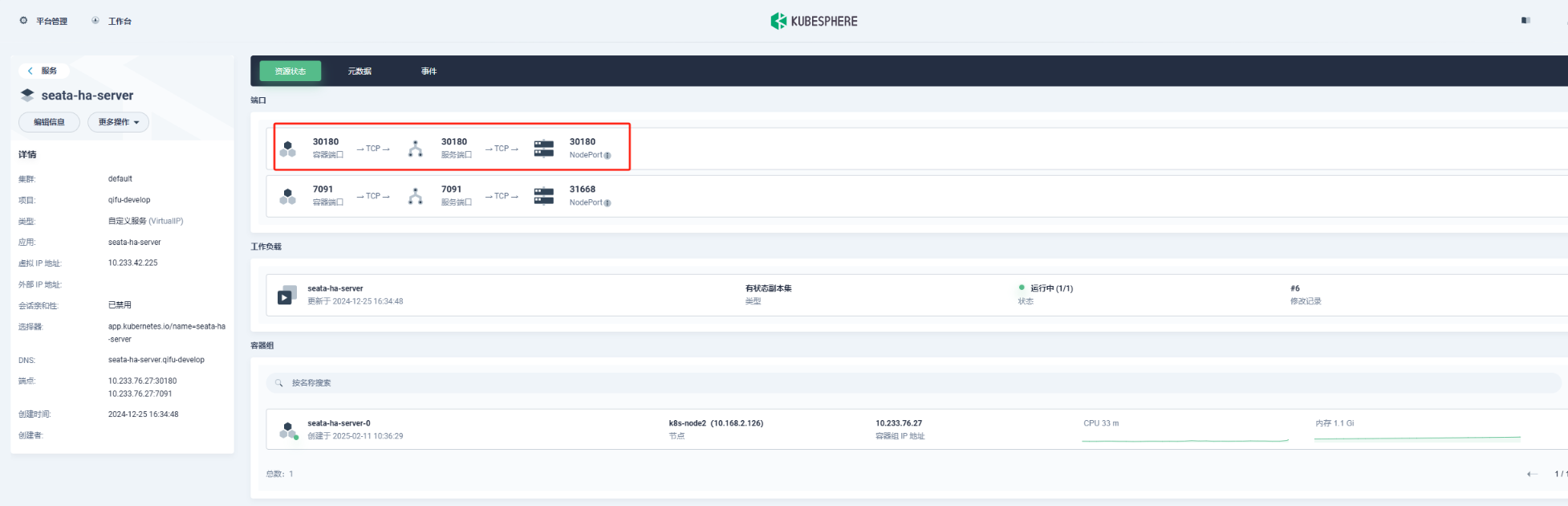

Then write a Service object to expose the port.
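The Service manifest itself is not shown in the post. A minimal sketch, assuming the pods carry the label `app: seata-server` and the server listens on port 30091 (both hypothetical), might look like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: seata-server-nodeport    # hypothetical name
spec:
  type: NodePort
  selector:
    app: seata-server            # assumed pod label; must match the seata-server pods
  ports:
    - name: service
      port: 30091
      targetPort: 30091          # port the container listens on (assumed SEATA_PORT)
      nodePort: 30091            # must fall in the cluster's NodePort range (30000-32767 by default)
```

Keeping `targetPort` and `nodePort` identical means the port registered in Nacos is the same one that is open on the node, so a client outside the cluster can use the registered address unchanged.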

Now the seata service can be reached from a local machine.
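For reference, a local client might be configured roughly as follows. This is a sketch that assumes a Spring Boot application using `seata-spring-boot-starter`; the application ID, transaction group name, and Nacos address are placeholders rather than values from this deployment.

```yaml
# application.yml of the local client (sketch; all values are placeholders)
seata:
  enabled: true
  application-id: demo-app                 # hypothetical application name
  tx-service-group: my_test_tx_group       # hypothetical transaction group
  registry:
    type: nacos
    nacos:
      application: seata-server            # service name the TC registers under
      server-addr: 127.0.0.1:8848          # replace with the Nacos address
      group: SEATA_GROUP
  config:
    type: nacos
    nacos:
      server-addr: 127.0.0.1:8848
      group: SEATA_GROUP
  service:
    vgroup-mapping:
      my_test_tx_group: default            # maps the group to the TC cluster name
```

The client looks up `seata-server` in Nacos and connects to whatever address is registered there, which is why the node IP and exposed port registered by the server are what make local access work.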